Self-Driving Safety: Protecting Passengers or Pedestrians?

Imagine the day when we become regular passengers in self-driving cars. Hopefully, by then, these cars will have become mindful machines capable of doing their best for the common good. They would merge politely and watch for pedestrians in the crosswalk, ultimately helping to keep the roads safe.

But should these machines prioritize their passengers or the pedestrians outside?

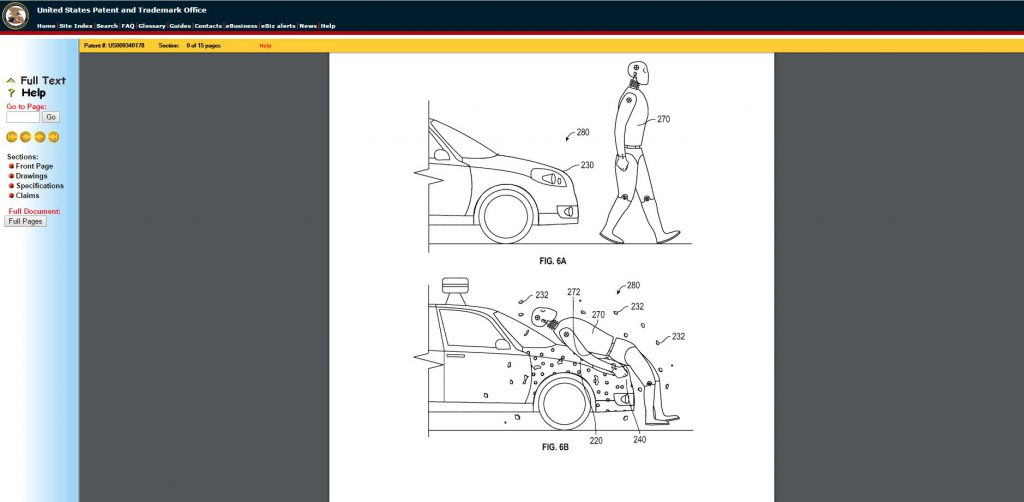

It’s a tough question, because ideally such vehicles should be able to protect both equally well. However, a recent research study shows that what people really want to ride in is an autonomous vehicle that puts its passengers first, above all else. In other words, if the car’s hypothetical “brain” has to choose between slamming into a wall and running someone over, well, sorry, you get the idea.

What did the study tell us?

Last month’s issue of Science magazine carried an interesting piece in which a group of computer scientists and psychologists described six online surveys of United States residents, conducted between June and November of last year, that asked people how they believed autonomous vehicles should behave. The researchers found that most of the people in their sample thought self-driving cars should be programmed to make decisions for the “greatest good.”

The study was conducted through a series of quizzes that presented “unpalatable” choices between saving yourself (or fellow passengers, who might be family members) and saving others.

Ultimately, however, the researchers found that people would rather stay alive themselves, above all else.

It is particularly telling that this dilemma of “robotic morality” has been chewed on in science fiction for what seems like forever. Now, in 2016, it has become a common, serious question for researchers working on technologies like autonomous vehicles, who must, in essence, program “moral decisions” into a machine.

As autonomous vehicles and artificial intelligence are developed more intensively than ever before, this has also become a philosophical question with business implications. Should manufacturers build machines with varying degrees of morality programmed into them, depending on what each consumer wants? Or should the government mandate that all self-driving cars share the same value of protecting some sort of greatest good, even if that is not as good for a car’s passengers?

First, let’s ask: what exactly is the greatest good?

“Is it acceptable for an A.V. (autonomous vehicle) to avoid a motorcycle by swerving into a wall, considering that the probability of survival is greater for the passenger of the A.V., than for the rider of the motorcycle? Should A.V.s take the ages of the passengers and pedestrians into account?” wrote Jean-François Bonnefon, of the Toulouse School of Economics in France.

When you think about it, this discussion has been going on since the “trolley problem,” which the British philosopher Philippa Foot introduced in 1967:

“Imagine a runaway trolley is barreling toward five workmen on the tracks. Their lives can be saved by a lever that would switch the trolley to another line. But there is one worker on those tracks as well. What is the correct thing to do?” (Engadget)

The new study is just the latest step in deliberating over that question. This research is already taking autonomous vehicle manufacturers down a philosophical and legal rabbit hole, just as they are closer to launch than ever before. The autonomous vehicle concept is so new that it will in all likelihood take years to find definitive answers. Think about the legal ramifications: if a manufacturer offers different moral algorithms, and a buyer knowingly chooses one of them over the others, is the buyer or the manufacturer to blame for the harmful consequences of that algorithm’s decisions?

Some researchers argue that teaching machines ethics may be the wrong way to approach the problem:

“If you assume that the purpose of A.I. is to replace people, then you will need to teach the car ethics,” said Amitai Etzioni, a sociologist at George Washington University. “It should rather be a partnership between the human and the tool, and the person should be the one who provides ethical guidance.” (Engadget).

Autonomous cars are closer to the public than ever before, especially with companies planning to launch pilot programs later in 2016. One thing is certain: transportation is changing. The question is what it is changing into.


Alex has worked in the automotive service industry for over 20 years. After graduating from one of the country’s top technical schools, he worked as a technician, achieving a Master Technician certification. He also has experience as a service advisor and service manager.